Dasymetric mapping is

"a technique in which attribute data that is organized by a large or arbitrary area unit is more accurately distributed within that unit by the overlay of geographic boundaries that exclude, restrict, or confine the attribute in question. For example, a population attribute organized by census tract might be more accurately distributed by the overlay of water bodies, vacant land, and other land-use boundaries within which it is reasonable to infer that people do not live."

-https://support.esri.com/en/other-resources/gis-dictionary/term/dasymetric%20mapping

For the lab this week we focused on dasymetric mapping, and I found this definition from Esri helpful, so I wanted to add it here. This week focused heavily on basic ArcGIS skills along with an in-depth understanding of how populations are distributed. For the lab we used a raster product of population density or imperviousness to get more accurate population estimates for certain areas.

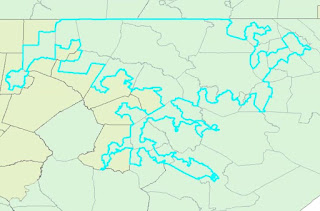

This image is a layout of the census tracts used for the population estimates, clipped and erased to remove hydrology features. It is overlaid on the imperviousness raster to show the population densities. This product, along with a good deal of statistical editing, allowed us to calculate more accurate population estimates.

Sunday, December 10, 2017

Tuesday, December 5, 2017

Special Topics in GIS Lab 14

This week in the lab we focused on the topic of gerrymandering, which is the drawing of congressional districts in a skewed way that distorts how a population is represented. We looked at metrics that could be used to measure the skewness of the districts. The two metrics used were compactness and community. Compactness measured how spread out and unnatural the district's shape was, while community measured how many counties the district overlapped either completely or partially. For compactness the equation 400π(area)/(perimeter)^2 can be used to get a value. This equation produces a result from 0 to 100, where 100 is the best compactness (a circle). The lowest compactness, near 0, would be the worst case where the perimeter is very large and the area very small. For community, the measurements were derived from running an intersect to see how many counties were divided into sections by the districts and how many counties were included in each district in total. The number of divided counties was then divided by the total number of counties within the districts to give the percentage of counties divided. Below are some examples of the worst compactness and community scores. The first demonstrates low compactness and the second low community.
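As a quick aside, here is a minimal Python sketch of the two scores described above. It is not the ArcGIS workflow from the lab; the function names and the sample numbers are made up for illustration, and the areas and perimeters are assumed to be in matching units.

```python
import math


def compactness(area, perimeter):
    """Return 400 * pi * area / perimeter**2, a score between 0 and 100."""
    if perimeter <= 0:
        raise ValueError("perimeter must be positive")
    return 400 * math.pi * area / perimeter ** 2


def percent_counties_divided(divided_counties, total_counties):
    """Return the share of counties in a district that are split, as a percent."""
    if total_counties <= 0:
        raise ValueError("total_counties must be positive")
    return 100 * divided_counties / total_counties


# A circle of radius 1 gets the best possible score (100, up to rounding).
circle_score = compactness(math.pi * 1 ** 2, 2 * math.pi * 1)

# A hypothetical long, thin 100 x 1 strip scores far lower.
strip_score = compactness(100 * 1, 2 * (100 + 1))

# Hypothetical district touching 8 counties, 6 of them only partially.
community_score = percent_counties_divided(6, 8)
```

The thin strip is the kind of shape that drives the compactness score toward 0: lots of perimeter for very little area.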

Wednesday, November 29, 2017

Special Topics in GIS Lab 13

This week in the lab we worked with scale and resolution. The lab focused on differences between line and polygon features in the first half and raster DEMs in the second portion described below.

To compare the two DEMs I focused on slope, aspect, and overall elevation. First, the slopes of each DEM were mapped and the averages compared. Then, for further analysis, the slope maps were combined to display the slope difference between the two DEMs. Second, the aspect of each DEM was mapped and compared to analyze the slope direction and the general direction the terrain was facing. This analysis gave more of a horizontal representation of the differences rather than strictly elevation differences. Finally, the overall elevation was compared for the two DEMs. The standard statistics were calculated and compared to see how each model represented the terrain.

The results clearly show that there is a difference between the SRTM and LiDAR data. Both DEMs were analyzed at 90m resolution. The slope of each DEM seemed to follow the terrain feature of the area, which was a drainage. The LiDAR model had a steeper average slope, which showed up in the higher elevations of the terrain feature. The slope difference between the models is also shown in the visual. The steepest areas, between the valleys and ridges, tended to have a larger difference, while relatively flat areas had a smaller difference.

The aspect maps of the DEMs at 90m resolution were fairly similar. The aspect analysis was meant to look at the models' horizontal or directional facing rather than elevation. The results show that even though the models are a very close match, there are still some areas that have different aspects. This shows that the slopes differed enough in those areas to cause the software to display a different facing direction. The differences are slight and nowhere near opposite directions, but if an accurate representation is vital then consideration should be given to which model to use for a study.

Finally, the elevation of the two models differs. In the table the results show that the LiDAR model has a lower average elevation along with a higher maximum and lower minimum elevation. To me these results show the LiDAR model as more complete. In the elevation comparison map the areas of largest elevation difference follow the trend in the slope difference map. They are in the specific areas of the terrain feature where the valley is transitioning to a ridge and has a steeper slope. The one interesting result is that the large differences in elevation are only seen on the southern side of the drainage in areas with steep slopes. The northern side of the terrain feature shows smaller differences between the two elevation models. This could be due to the location of the sensor platforms when each model's data was collected.

The creation of the LiDAR and SRTM data both involve airborne or spaceborne sensors. LiDAR can also be collected with ground-based sensors, but larger areas tend to be collected using airborne sensors. Both sensors collect elevation data, but one is tens to hundreds of miles in the air, while the other is less than a mile above the ground. The fact that the SRTM data is at 90m is impressive considering how high up in space the sensor is located. Overall I would have to say that the LiDAR model is a more accurate representation of the drainage feature in all parameters because the sensor collects data far closer to the source, the model was derived from a higher resolution product, and the LiDAR sensor was probably built for high resolution, large scale ground mapping.

Wednesday, November 22, 2017

Special Topics in GIS Lab 12

This week in the lab we focused on Geographically Weighted Regression (GWR). This type of regression uses the same analysis as Ordinary Least Squares (OLS), but takes a spatial aspect into account. For the lab this week we used a county area with locations and types of different crimes. The goal was to select a particular dependent variable and figure out which independent variables describe it best. Using techniques learned from previous labs I created a correlation matrix and ran an exploratory regression in ArcGIS, which showed that the most descriptive regression used three distinct independent variables. I then used these variables to run OLS and GWR analyses for comparison. After reviewing the results it was clear that the GWR was more than 10% more accurate in describing the dependent variable than the OLS. This is due to the GWR taking the spatial aspect into account rather than analyzing the area as a whole.

Monday, November 13, 2017

Special Topics in GIS Lab 11

Using regression analysis in ArcGIS this week was eye-opening. Prior to this week the analysis was all done in Excel and displayed in spreadsheets. This week we were able to pull the information into ArcGIS and perform the same analysis in a way that produced a visual representation as well as a summary of statistical reports that help the user analyse the testing. First you need the data for the analysis to be performed on. Then you simply use the Ordinary Least Squares tool in ArcGIS to set your data set, dependent variable, and independent variable(s), and let the program do the work. The results are provided in an easy-to-read report that gives all the information you need to decide if the results fit your analytical needs. This week in the lab we worked on interpreting the results. The focus was on the many values that the program calculates to analyse the regression. The tool that you run in ArcGIS even incorporates instructions in the results for reading the reported variables and determining if the result is what you are looking for.

The OLS tool produces a residual map that visually shows how far the actual data is from the expected value on the regression line. This visual aid can help us determine the accuracy and bias that could be present in the results. For instance, if there is a pattern in the results, or areas where the residuals are similar, it could show a bias or non-random correlation. This information is good to have because it could clue you in to the fact that you need to use another variable to run the regression analysis.

Finally at the end of the lab (of course) it was revealed that rather than running the tool many times on different independent variables you can simply run another tool that includes all of the variables you wish to analyse and reports the most accurate result possible. The capability of technology today is impressive and makes me wonder what the engineers and analysts will have the software calculating for us next!

Monday, November 6, 2017

Special Topics in GIS Lab 10

The lab this week focused on regression and how to analyze data to determine predicted values. The focus this week was in Microsoft Excel rather than ArcGIS. The analysis was formula intensive, but allowed for a better understanding of how to use Excel to gather the data parameters you want for analysis. The Excel program also has a great tool that can be used to accomplish the majority of the regression analysis in one easy step.

This example sums up the processes we used in Excel this week. The goal is to determine if the data points you collect or are given have any correlation and, if so, how well the values predict each other. In our example two stations reported rainfall annually for a given number of years. One station failed to report for a span of years, and it is your job to predict the missing amounts to complete the dataset. To do that you perform a regression analysis on the rest of the data to find the slope and intercept of a "trendline" for the data. This line equation can then be used to enter the x-values from one station and find the y-values for the missing years at the other station. The values will not be perfect, but they will be predictions in line with the rest of the data.
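The same trendline idea can be sketched outside of Excel. The Python below is only an illustration: the rainfall numbers are made up, the function name is my own, and the slope and intercept are computed with the ordinary least squares formulas (the same quantities Excel's SLOPE and INTERCEPT functions report).

```python
def fit_line(xs, ys):
    """Return (slope, intercept) of the least squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical annual rainfall (inches) for years when both stations reported.
station_a = [30.0, 35.0, 40.0, 45.0, 50.0]
station_b = [28.0, 33.5, 37.0, 43.5, 48.0]

slope, intercept = fit_line(station_a, station_b)

# Years where only station A reported: predict station B from the line.
station_a_only = [32.0, 47.0]
predicted_b = [slope * x + intercept for x in station_a_only]
```

With these made-up numbers the fitted line has a slope of 1 and an intercept of -2, so the predictions are simply station A's values minus 2.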

Tuesday, October 31, 2017

Special Topics in GIS Lab 9

This week in the lab we learned about accuracy statistics when using Digital Elevation Models (DEMs). To carry out the analysis you need a DEM to analyse and a set of test elevation points that cover the DEM extent. This analysis is only for vertical elevation accuracy; we focused on horizontal accuracy in the first part of the semester. For the vertical accuracy statistics we plotted the test points against the DEM, then derived the elevation values of the DEM pixels at the test point locations. By comparing the two values we were able to calculate RMSE along with the 95th and 68th percentile statistics. The image below shows the result of the vertical accuracy testing. The letters a through e are the different types of land cover represented in the test points. These were provided as comparison factors for the accuracy statistics. The overall accuracy is listed at the end under All.
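For anyone curious about the arithmetic, here is a small Python sketch of those statistics. The elevations are invented, and the percentile function uses simple linear interpolation between sorted values, which is an assumption on my part and may not match exactly how the lab spreadsheet computed its percentiles.

```python
import math


def rmse(errors):
    """Root mean square error of a list of signed errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def percentile(values, pct):
    """Percentile by linear interpolation between the sorted values."""
    ordered = sorted(values)
    if len(ordered) == 1:
        return ordered[0]
    rank = (pct / 100) * (len(ordered) - 1)
    lower = math.floor(rank)
    upper = math.ceil(rank)
    fraction = rank - lower
    return ordered[lower] + fraction * (ordered[upper] - ordered[lower])


# Hypothetical elevations (meters): DEM pixel value vs. surveyed test point.
dem_elevations = [101.0, 98.0, 105.0, 110.0, 95.0]
test_elevations = [100.0, 100.0, 102.0, 110.0, 99.0]

errors = [dem - test for dem, test in zip(dem_elevations, test_elevations)]
abs_errors = [abs(e) for e in errors]

vertical_rmse = rmse(errors)
p68 = percentile(abs_errors, 68)
p95 = percentile(abs_errors, 95)
```

Running the same three calculations on the points of each land cover type separately would give the per-class rows (a through e) alongside the overall row.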

Tuesday, October 24, 2017

Special Topics in GIS Lab 8

This week in the lab we focused on using interpolation methods to analyse water quality data near Tampa Bay, FL. The data given was point data for water quality levels over a body of water near Tampa Bay. The interpolations we used were the Thiessen, IDW, and Spline methods. All three are widely used today for many applications. The image below is a representation of an Inverse Distance Weighting (IDW) interpolation.

I like the representation of this interpolation because it is visually pleasing, even though it is not always the most accurate of the interpolations. The Thiessen method (also called the Nearest Neighbor or polygon method, all the same thing) basically divides the area into polygons around the individual data points. This method keeps the integrity of the original data and uses each point's value for the whole of its polygon. It is not very aesthetically pleasing, but it is more accurate than IDW at times. Finally, the spline method uses trends in the data to smooth out the visual to best represent the data as a whole. There are many ways to set up a spline, but as we learned in the lab they are not always the most accurate and are dependent upon the correctness of the collected data.
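To make the difference between the first two methods concrete, here is a minimal Python sketch that estimates a value at one location. It is not the ArcGIS tool: the sample points are invented, the function names are my own, and the IDW power of 2 is just a common default I am assuming.

```python
import math


def idw(samples, x, y, power=2):
    """Inverse distance weighted estimate at (x, y) from (sx, sy, value) samples."""
    numerator = 0.0
    denominator = 0.0
    for sx, sy, value in samples:
        distance = math.hypot(x - sx, y - sy)
        if distance == 0:
            return value  # exactly on a sample point: use its value
        weight = 1 / distance ** power
        numerator += weight * value
        denominator += weight
    return numerator / denominator


def nearest_value(samples, x, y):
    """Thiessen-style estimate: the value of the closest sample point."""
    closest = min(samples, key=lambda s: math.hypot(x - s[0], y - s[1]))
    return closest[2]


# Hypothetical water quality readings at two monitoring points.
samples = [(0.0, 0.0, 10.0), (2.0, 0.0, 20.0)]

idw_estimate = idw(samples, 0.5, 0.0)
thiessen_estimate = nearest_value(samples, 0.5, 0.0)
```

At the same location the Thiessen-style estimate keeps the original reading of the closest point, while IDW blends both readings and leans toward the closer one, which is why its surface looks smoother.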

Wednesday, October 18, 2017

Special Topics in GIS Lab 7

This week we worked with TINs and DEMs. For those of you new to elevation models, a TIN (triangulated irregular network) is a network of triangles built from irregularly spaced elevation points, with slope, aspect, and elevation represented. A DEM is a digital elevation model that assigns an elevation to each cell of a grid, with the cell size set by the resolution. This week we practiced creating elevation models in ArcGIS and analyzing them. We started with the TIN and found many ways to adjust the symbology of the data to represent exactly the view we need from the product. TINs were fairly easy to work with and visualize, especially when converted to a 3D image in ArcScene. Finally, working with the DEMs, we created a slope analysis for a ski resort and were able to display the areas with ideal slope for medium skill level skiers. This document could show the resort the best places to form the next run and what skill level to label it. Below is a screenshot of the DEM analysis in ArcScene. You can see the categorized slope areas along with their aspect and overall elevation.

Tuesday, October 10, 2017

Special Topics in GIS Lab 6

This week in class we focused on Location-Allocation analysis. It involves determining the best solution, based on selected criteria, for matching locations with central hubs. A good example is customers in different locations around the U.S. needing service from a package handler like UPS, which has central hubs in different areas. The analysis would provide a solution for which customers should be serviced by which hubs.

This week we focused on a solution that matched customers to a distribution center. After the analysis was run we compared the solution to an overlay of the market areas. Some of the customers were being serviced by distribution centers that were not responsible for their market area. To fix the outliers we simply needed to re-designate some of the market areas. The image below shows the new market areas. It only differs slightly from the original market areas due to the small number of outliers. It is interesting to see the simple analysis that we performed this week. It has so many possible applications and could save companies a lot of money by increasing efficiency.

Wednesday, October 4, 2017

Special Topics in GIS Lab 5

This week in class we learned a lot about solving Vehicle Routing Problems using ArcGIS. I really enjoyed the week because it involved getting down into the settings of the software to really see what the program is capable of. The goal was to analyse data given for a company that needed to deliver orders to different customers in the south Florida region. The orders were located at a central distribution facility and there were 22 trucks and drivers available to deliver. In the beginning we restricted ourselves to 14 trucks to try and save on costs, but it resulted in many orders not getting delivered and some being delivered late. After the addition of two more routes all packages were delivered and only one was slightly late (2 minutes). The addition of the two extra routes greatly improved customer service and increased revenue. An image including the delivery zones and routes surrounding the central distribution center shows where all of the drop-off sites are located.

The system took in all of the information provided by the user and followed strict constraints to produce the solution that would decrease distance and time costs while stopping at the maximum number of drop-off sites.

Wednesday, September 27, 2017

Special Topics in GIS Lab 4

This week we are working with networks. In the lab we created a network and practiced adjusting functionality to see how it would display certain routes. The basic network was just a set of edges and junctions (roads and intersections). Then we added a restriction layer that would not allow certain turns onto some streets from others, or where there were traffic lights. Finally we used speed limit data to determine the speed a vehicle would travel on each road based on the time of day. This speed also varied depending on which direction you were driving on a given road.

In every step of the lab we performed a route analysis. With the basic network the route simply plotted the shortest distance to get through all of the stops from beginning to end. Then the route was run with the restrictions turned on, which increased the total distance and time of the route. This is because some turns were not allowed, so it had to calculate a different route. Finally, with the traffic information provided, the route was calculated with data for 8:00am on a Monday morning and returned that it would take 122 minutes and cover 103 kilometers, which was again an increase from the route run with restrictions.

Wednesday, September 20, 2017

Special Topics in GIS Lab 3

The lab for week three involved the accuracy statistics of completeness and positional accuracy. The actual lesson and reading focused on point-based versus line-based accuracy analysis for line features. Lab two covered the point-based approach to analyzing line features, if you want to check that post out. This week the analysis involved the completeness statistic and two layers of roads in southern Oregon. The road layers were placed on a grid system where the layers were intersected to "cookie cut" them. Then the total lengths of the roads were added up in each grid cell for the two layers. A comparison could then be made by looking at which layer had more length of road in each grid cell. This percentage was then displayed in a choropleth map to show the layer comparison.

The overall goal of the accuracy assessment in this lab was to see which layer was more complete by looking at the total lengths of roads in the county and then analyzing them grid by grid. From the product you can see that the TIGER layer seemed to be more complete in the urban areas and the Centerline layer was more complete in the rural areas.
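The grid-by-grid comparison can be sketched in a few lines of Python. The cell names and road lengths below are made up, and the percent difference formula (measured relative to the Centerline layer) is my assumption for illustration; the lab's exact formula may have differed.

```python
def percent_difference(base_km, other_km):
    """Percent by which base_km exceeds other_km, relative to base_km.

    Returns None when the base layer has no road length in the cell.
    """
    if base_km == 0:
        return None
    return (base_km - other_km) / base_km * 100


# Hypothetical total road length (km) per grid cell: (Centerline, TIGER).
cells = {
    "cell_1": (12.0, 9.0),
    "cell_2": (4.0, 5.0),
}

comparison = {
    name: percent_difference(centerline_km, tiger_km)
    for name, (centerline_km, tiger_km) in cells.items()
}
```

A positive value means the Centerline layer has more road length in that cell, and a negative value means the TIGER layer does. Those signed percentages are the kind of value a choropleth map can then shade.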

Monday, September 11, 2017

Special Topics in GIS Lab 2

For this lab we looked at the city streets of Albuquerque, New Mexico. The image above is a screenshot of the test points selected for the accuracy analysis. To obtain the accuracy statistics for the layer we had to create a network data set for intersection points within the street layer. Once the points were created a random generator was used to select a number of random points throughout the study area. The selected points were then narrowed down based on the criteria for an appropriate test point. Finally twenty-four points remained. Next the points were compared to more accurate orthophotos. Reference points were placed on the correct or "true" location that the selected points were trying to represent. The distance between these points was used to then calculate the accuracy statistics based on the NSSDA guidelines. The accuracy statement derived for the above layer is:

The depicted layer was found to be the less accurate of the two layers tested and did not represent the true locations as well as the other layer.

Horizontal Positional Accuracy:

Tested 243.08 feet horizontal accuracy at 95% confidence level
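For reference, the NSSDA horizontal statistic can be sketched in Python as below. The coordinates are invented and the function name is my own. The 1.7308 multiplier is the NSSDA factor for 95% confidence, which assumes the errors in x and y are roughly equal in size.

```python
import math


def nssda_horizontal(test_points, reference_points):
    """Return (RMSE_r, NSSDA accuracy at 95%) for paired (x, y) points."""
    squared_errors = [
        (tx - rx) ** 2 + (ty - ry) ** 2
        for (tx, ty), (rx, ry) in zip(test_points, reference_points)
    ]
    rmse_r = math.sqrt(sum(squared_errors) / len(squared_errors))
    # NSSDA multiplier for the 95% confidence level (assumes RMSE_x ~ RMSE_y).
    return rmse_r, 1.7308 * rmse_r


# Hypothetical street intersections (feet) and their "true" orthophoto locations.
test_points = [(3.0, 4.0), (4.0, 3.0)]
reference_points = [(0.0, 0.0), (0.0, 0.0)]

rmse_r, accuracy_95 = nssda_horizontal(test_points, reference_points)
```

The second value is what goes into a statement of the form "Tested ___ feet horizontal accuracy at 95% confidence level".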

Wednesday, September 6, 2017

Special Topics In GIS Lab 1

This week it was nice to get back to the grind by working with ArcMap and using the toolbox. We created buffer regions around an average location of fifty GPS points taken by the same device in the same location. The goal was to determine the precision and overall accuracy of the data or device itself. The results can be seen in the following map. I attempted to keep the layout simple and only show the needed data to understand the precision and accuracy aspects.

The point that was made this week in class is that accuracy and precision are two different things. Precision is measured as the distance a point sample is from the average of all of the point samples. The accuracy is measured as the distance the samples are from the reference point or the "True" location. The reference point measured 3.78 m from the average location of the way points taken by the GPS. This shows that the GPS location was fairly accurate. 3.78 meters is about the standard width or length of a bedroom in a normal house. For measurement tools from space that seems fair to call the average way point accurate. The horizontal precision of 4.85 meters seems in line with the accuracy of the average. I would question the results if the horizontal precision was much larger than the horizontal accuracy. Vertically the accuracy is 5.96 meters and the vertical precision is 5.8 meters. Again the numbers are fairly similar and small in scale. In my military experience these numbers would be fine for striking a large building, but if I was looking to strike a vehicle I would have a high probability of missing.
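Here is a minimal Python sketch of the two distance measurements described above. The waypoints and reference point are made up, and how the per-sample distances get summarized into a single precision number (for example with a percentile) is left out because I am not restating the lab's exact method here.

```python
import math


def mean_location(points):
    """Average x and y of a list of (x, y) points."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


def distance(a, b):
    """Straight-line distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


# Hypothetical GPS waypoints (meters, projected coordinates) and the true point.
waypoints = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0)]
reference_point = (4.0, 5.0)

average = mean_location(waypoints)

# Precision: how far each sample sits from the average of all samples.
precision_distances = [distance(p, average) for p in waypoints]

# Accuracy: how far the average location sits from the reference point.
accuracy_distance = distance(average, reference_point)
```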

Monday, May 22, 2017

GIS Programming Module 2

This week the lab was focused on writing our own script that prints our last name along with the number of letters in it multiplied by 3. The following screenshot is the result I came up with, without showing the whole script. When I started on the script I was a little unsure where to begin, but once you set a variable the rest flows fairly quickly. I would say most of this lab dealt with a logical flow to represent the different numbers and text that the lab asked for. Some of the steps are sort of hidden when only looking at the result. In the screenshot you can only see the printed last name variable and the triple count of the letters in the last name.

The process involved a lot more than it seems. First a string was created with your full name, then a list with the name split into first, middle, and last. From here you were able to select your last name and get a count that you then multiplied by three. The end goal of the lab was not simply to make the script print what you want, but to make it universal so that you could change the full name in the first variable and the script would adjust and display the correct information for that particular name. It was an interesting lab that showed us a basic interface using Python and I can't wait to continue with the scripting!
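Without giving away the actual lab script, the steps described above look roughly like this sketch. The name is a placeholder and the variable names are my own.

```python
# Placeholder full name; changing only this line updates everything below.
full_name = "Jane Quinn Doe"

# Split the string into a list of first, middle, and last names.
name_parts = full_name.split()

# The last name is the final item in the list.
last_name = name_parts[-1]

# Count the letters in the last name and multiply by three.
triple_count = len(last_name) * 3

print(last_name)
print(triple_count)
```

Because the last name is taken from the end of the list, the script still works if the name in the first variable has no middle name.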

Tuesday, May 16, 2017

GIS Programming Module 1

For our first module in GIS Programming we were introduced to Python and scripting in ArcGIS. For this week we learned how to run a previously written script. The script created a folder for the class this semester and organized it into modules for each week, with separate folders within each module for Data, Scripts, and Results.

The process to actually run the script in the PythonWin program was fairly simple. I chose the method of clicking on the Run button in the menu bar. After clicking on the button a window opened that asked which script I wanted to run, if I had any arguments to add, and what debugging options I wanted. I confirmed the folder script was selected and left the argument and debugging options at the default settings. Then a simple click on the “OK” button actually ran the script. After the script was run the window simply disappeared and the script was still open in the script window.

Tuesday, April 4, 2017

Communicating GIS Lab 10

This week for the lab we worked with temporal mapping. For our end products we produced a video file that consisted of a map showing the changes in data over a certain time. Rather than using a side-by-side comparative map, the video allows the map creator to chronologically display many maps of data one after the next for a certain length of time. This helps the user visualize the changes and see trends that may be hard to distinguish in the side-by-side comparison. In the first video we experimented with the changing population of the major U.S. cities up to the year 2000.

For this video the screenshots show how at different times in the video the data displayed is different and the symbology and labels correspond to the time frame being displayed. These features are accomplished through dynamic and static labeling along with corresponding displayed symbology.

Screenshots from the video: Year 1870 and Year 1970

In the first image you can see the year label 1870 is darkened to represent the current data year. The symbology also reflects the graduated symbol population of the major U.S. cities for the year of 1870. Also at the top right of the map you can see we practiced adding the dynamic text with the "Year: 1870" displayed. In the second image you can see that the data is different based on the symbology for the population and the dynamic labels representing the year 1970 rather than 1870. These images are just two screenshots from the video file. When the video is played it shows the years of data in a chronological order and the populations seem to grow as the years progress. It is a very fancy representation of a large amount of trend data.

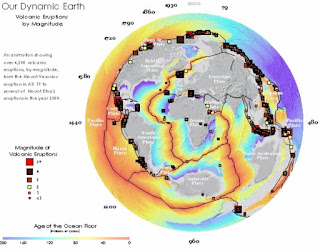

The second video map for the lab worked in the same way except the layout was slightly different. In the screenshots you can see the labels are in different locations, representing a part of the whole data rather than a snapshot year. The data builds rather than changing. In this map the number and locations of volcanic eruptions are mapped with the year and magnitude of each.

In these images you can see the building data along with the representation of the years passed going around the globe. Again another inventive idea to represent compiling data in order to analyse trends.

Wednesday, March 29, 2017

Communicating GIS Lab 9

This week for the lab we practiced creating multi-variable maps. In order to do this you have to start with two sets of normalized data that you can represent together in the same area of the map. For our example we have two sets of attribute data associated with the counties in the United States. If you are using a 9-class legend for the choropleth map you should have 3 classes for each of the variables. This will allow you to combine the data into the 9 total classes for the map. Creating the attribute that you need for the final classification goes pretty deep into the use of attribute tables in ArcMap, but the basic step is to split each variable into three quantile classes, then combine the class codes from the two variables to create the final attribute for the classification. In our lab we used 1, 2, 3 for the obesity variable and A, B, C for the inactivity variable. So a typical result for a particular county would look like "A3" in the final attribute category.

From here the counties are symbolized using unique values based on this "final attribute" and you have your bivariate choropleth map! The hardest part of making the map is the selection of your colors to represent your classes. You also have to build the 9-class grid from scratch in ArcMap since there is no feature to do this. In the end the result is worth the effort and it makes for an aesthetically pleasing map.
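The class-code step can also be sketched in Python. The county values below are invented and the function name is my own. The rank-based split into three equal-sized groups is a simple stand-in for ArcMap's quantile classification, so tied values may land differently than they would in ArcMap.

```python
def tercile_classes(values, labels):
    """Assign each value one of three labels by rank (lowest third first)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    classes = [None] * len(values)
    for rank, index in enumerate(order):
        classes[index] = labels[rank * 3 // len(values)]
    return classes


# Hypothetical normalized rates (percent) for six counties.
obesity = [22.0, 31.0, 27.0, 35.0, 25.0, 29.0]
inactivity = [18.0, 30.0, 21.0, 33.0, 26.0, 24.0]

obesity_class = tercile_classes(obesity, ["1", "2", "3"])
inactivity_class = tercile_classes(inactivity, ["A", "B", "C"])

# Combine the two codes into the final attribute, e.g. "A3".
final_attribute = [a + o for a, o in zip(inactivity_class, obesity_class)]
```

Each county ends up with one of the nine possible codes (A1 through C3), which is the field the unique values symbology is built on.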

Wednesday, March 15, 2017

Communicating GIS Lab 8

This week we worked with infographics. The goal was to produce a product using two sets of national data. The data I chose was the percent of population that had attended college and the percentage of unemployment. The data is represented by county in the two maps of the U.S., and the information is broken into state averages in many of the infographics. The two base maps allow the user to draw their own basic conclusions about areas where there is a lower college educated population and higher unemployment. Then the information about the state averages introduces the top three and bottom three performers for college education and unemployment in the bar graph. On the left side of the product there is a scatter plot with a trendline showing that across all of the county data there is a slight trend confirming the basic conclusion that can be drawn. Additionally there is summary information provided in the center of the product referencing the total U.S. averages and prior-year statistics.

In finalizing the layout I decided that the design would look cleaner if I separated each infographic with its own “neat line.” This would cut down on confusion of legends and data between infographics and help direct the user where to look for what information. I chose different areas for the infographics based off where the map data was for the two U.S. maps. I used the spaces that were best fitted for the infographic to help balance the product as a whole. I chose a dark background color to represent either water or just a neutral background for all of the information to be overlaid on. I was able to use normal legend symbology effectively by curving it around the map features. This helped with the overall balance of the product as well. Finally I added a title describing the data and year of the analysis.

Wednesday, March 8, 2017

Communicating GIS Lab 7

This week we spent time learning about terrain elevation and how we can visualize it effectively in ArcGIS. We started with elevation data and in this example added land cover polygon data. The elevation data is useful by itself, but it is hard to visualize what is high terrain and what is lower terrain. To give a better picture I ran a hillshade with the default azimuth and altitude to shed a little light on the terrain. With the hillshade you can see the actual ridges and valleys of the area and judge slopes better than with the flat grayscale data. Then I took the land cover data and adjusted the symbology to show the different types of features in their actual colors (for the most part); some are altered to make it easier to distinguish between types of vegetation on the map.

Finally with both layers displayed correctly I adjusted the transparency of the land cover layer to allow the hillshade layer to be visible underneath it. This creates the illusion that the 3D hillshade is colored with the land cover data. Then by adding the standard map elements and trying to balance the awkwardly shaped area as best as possible, I was able to produce an effective map representing elevation and land cover data in one.
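
To show what a hillshade is doing under the hood, here is a minimal sketch of the basic shading idea for a single cell. It assumes simple Lambertian shading (the surface normal dotted with the direction to the light), with the commonly used default azimuth of 315 degrees and altitude of 45 degrees. The function name and the slope inputs are my own for illustration, and this is not meant to reproduce the ArcGIS tool cell for cell.

```python
import math

def hillshade(dz_dx, dz_dy, azimuth_deg=315.0, altitude_deg=45.0):
    """Return a 0-255 brightness for one cell.

    dz_dx is the elevation change per unit distance toward the east,
    dz_dy is the change toward the north (assumed conventions).
    """
    az = math.radians(azimuth_deg)    # measured clockwise from north
    alt = math.radians(altitude_deg)  # angle of the light above the horizon

    # Unit vector pointing from the surface toward the light (x = east, y = north, z = up).
    light = (
        math.sin(az) * math.cos(alt),
        math.cos(az) * math.cos(alt),
        math.sin(alt),
    )

    # Unit normal of the surface z = f(x, y).
    length = math.sqrt(dz_dx ** 2 + dz_dy ** 2 + 1.0)
    normal = (-dz_dx / length, -dz_dy / length, 1.0 / length)

    illumination = sum(n * l for n, l in zip(normal, light))
    # Cells facing away from the light are clamped to black.
    return 255.0 * max(0.0, illumination)

flat = hillshade(0.0, 0.0)            # level ground
facing_light = hillshade(1.0, -1.0)   # slope facing northwest, toward the light
facing_away = hillshade(-1.0, 1.0)    # slope facing southeast, away from the light
print(round(flat, 1), round(facing_light, 1), round(facing_away, 1))
```

Slopes that face the light come out brighter than flat ground and slopes that face away go dark, which is what makes the ridges and valleys stand out under the semi-transparent land cover layer.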

Wednesday, February 22, 2017

Communicating GIS Lab 6

This week we experimented with choropleth maps. We learned different classification techniques and what each is useful for. I was able to create a few maps to practice my skills with the symbology and color ramps. One of the big learning points for me was the use of normalized data rather than raw data. Normalized data lets the map show the information accurately, while classifying raw counts has little real meaning because the counts mostly reflect how large or populous each area is.

The culmination of Lab 6 was our choropleth map of Colorado population change between 2010 and 2014. For my classification I used 6 classes in order to get 0% between two classes. I chose a color ramp of diverging red and green to show an increase in population with green hues and a decrease in population with red hues. The 6 classes were separated using the natural breaks classification. This gave me an accurate representation for all of the data without skewing the classes. I then adjusted the middle class to begin at 0%.

For the legend I ordered the classes to show the increasing percentages at the top and decreasing percentages at the bottom with their respective symbols. I rounded the percentages to two decimal places since the percentages were generally small. For the title and description I used the population change and the years it occurred.
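
As a rough illustration of the normalization and the six diverging classes, the sketch below computes percent change and drops each value into a class with a break pinned at 0%. The break values and population figures are made up for the example. They are not the natural breaks from my map.

```python
from bisect import bisect_right

def percent_change(old, new):
    """Normalize a raw change in population to a percentage of the starting value."""
    return (new - old) / old * 100.0

# Hypothetical class breaks in percent: three classes below 0 and three at or above it.
BREAKS = [-4.0, -2.0, 0.0, 2.0, 4.0]

def class_index(pct):
    """Return 0-5; indexes 0-2 are decreases (red hues), 3-5 are increases (green hues)."""
    return bisect_right(BREAKS, pct)

# Made-up (2010, 2014) populations for three counties.
counties = {"County X": (1000, 1050), "County Y": (2000, 1990), "County Z": (500, 500)}
for name, (pop_2010, pop_2014) in counties.items():
    pct = percent_change(pop_2010, pop_2014)
    print(name, round(pct, 2), class_index(pct))
```

Putting a break exactly at 0 means no class mixes growing and shrinking counties, so the red and green halves of the ramp stay meaningful.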

Wednesday, February 15, 2017

Communicating GIS Lab 5

Proportional symbol mapping can be a useful tool when trying to display numerical data based on certain features. This week we experimented with the symbology and how to effectively communicate data to the user. Proportional symbology allowed us to use different sized symbols to represent specific numerical values for different areas. With the areas being proportional to each other based on the data it made it easy for users to draw conclusions based on the data.

One particularly interesting feature we worked with was how to display positive and negative numerical data using the proportional symbol mapping. In the map below you can see that the same proportional symbols can be used creatively to display a positive value of the data (Green) and a negative value (Red).

I think this type of symbology also grabs the user's attention better than a color ramp. Different sized objects on a map immediately draw the user's attention to the fact that one is bigger than the other. Rather than seeing a specific color and wondering what that color represents, the user can tell that one area has more of something than another area without referencing the legend. This could be extremely useful in quick reference products where the title explains the data.
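
For anyone curious how the symbol sizes relate to the data, here is a minimal sketch of one common approach: scale the circle's radius by the square root of the value so that the circle's area is proportional to the data. The function name, the maximum radius, and the sample values are hypothetical, and this is not necessarily how ArcMap sizes its symbols internally.

```python
import math

def symbol_for(value, max_abs_value, max_radius=20.0):
    """Return (radius, color) for a proportional circle.

    The radius uses a square root so the AREA of the circle tracks the magnitude;
    the sign of the value only changes the color.
    """
    radius = max_radius * math.sqrt(abs(value) / max_abs_value)
    color = "green" if value >= 0 else "red"
    return radius, color

# Made-up values: a gain of 100 and a loss of 25.
values = [100, -25]
largest = max(abs(v) for v in values)
for v in values:
    print(v, symbol_for(v, largest))
```

A value one quarter the size gets a circle with half the radius, so its area is one quarter as large, and the green/red split handles positive versus negative.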

Saturday, February 4, 2017

Communicating GIS Lab 4

This week we are working with color progressions and experimenting with linear vs. adjusted color ramps. The linear progression makes the most sense mathematically when it comes to choosing colors in a ranking scale. The darker shades are definitely harder to distinguish from one another. I did not think they would be as close as the lab described, but it definitely shows in the color ramps. For this reason I think the adjusted progression is the most effective. The color ramp clearly separates the darker shades more than the lighter ones. With my adjustment I went with the “1/3” rule the lab described and it seemed to work. I was pleased with the color ramp and would choose the adjusted ramp over the linear one. Then using ColorBrewer I selected a slightly greener base. The intervals seemed to follow the adjusted progression, but were almost random in a sense. They were generally larger for the darker shades, but had no real pattern.

Tuesday, January 31, 2017

Communicating GIS Lab 3

This lab focused on typography. We created a map of the San Francisco area and practiced labeling features. The font for the entire map is Arial. I chose to keep the same font for all of the labels in order to keep the map uniform. The only font that was not set to Arial was the title font, which helped to separate it from the rest of the data. The font sizes on the product vary slightly to show a hierarchy. The larger size shows a major city (e.g. San Francisco) while the smaller font shows sub-cities. I attempted to use a font size large enough to be legible, but small enough to not distract from the rest of the information. The placement decisions were a little more difficult. Since a dark color was used for park backgrounds and the roads were so numerous on the map, I had to be creative and use text halos and contrasting colors. Overall I attempted to place the labels offset from each other so they would not overlap while leaving space for the normal map elements. For these labels I used the standard text tool on the Draw toolbar.

Thursday, January 19, 2017

Communicating GIS Lab 2

The area of interest I chose for our Lab 2 deliverable was the state of Texas. I used the custom projection NAD 1983 (2011) Texas Centric Mapping System Albers for the data. I chose this coordinate system because Texas spans three UTM zones and is broken up into five State Plane zones. This central coordinate system is a conic projection with the standard parallels approximately 1/6 of the way in from the top and bottom of the state and a central meridian dividing the state equally. Other options were similar systems from an earlier year, or ones that used the Lambert projection instead. I chose to stick with the Albers projection to preserve the area of the state. Included on the product are reference grids in geographic coordinates and in projected coordinates. The two sets are shown together on the map to compare the measurements and actual lines.

Many products on the military side are represented in a similar way. For instance a 1:50,000 scale MGRS map used for land navigation and aerial planning has a reference grid labeled with the MGRS coordinates. In the background in a separate color there is also a reference grid for Latitude and Longitude so that the user can compare the two based on how they are navigating.
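
The "1/6 from the top and bottom" placement of the standard parallels mentioned above is easy to work out by hand, and the short sketch below shows the arithmetic. The latitude bounds are rough, rounded values for Texas that I am assuming for illustration, so the output only approximates where the parallels should fall and should not be read as the official parameters of the Texas Centric system.

```python
def standard_parallels(south_lat, north_lat):
    """Place the two standard parallels one sixth of the latitude span in from each edge."""
    span = north_lat - south_lat
    return south_lat + span / 6.0, north_lat - span / 6.0

# Approximate, assumed latitude extent of Texas in decimal degrees.
texas_south, texas_north = 25.8, 36.5
lower, upper = standard_parallels(texas_south, texas_north)
print(round(lower, 2), round(upper, 2))
```

Keeping the parallels inside the area of interest like this spreads the distortion more evenly across the state than placing them at the edges would.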

Thursday, January 12, 2017

Communicating GIS Lab 1

For our first map in the class I had some difficulty getting back into the ArcMap mindset. It was nice getting to go through the program and relearn how to do things. Slowly but surely I was able to pull my memories together to come up with my finished product.

For this product we focused on the 5 map design principles:

Visual Contrast- For the background of Travis County I used one of the standard land feature colors, light green. Leaving the background of the map data frame white creates a good contrast and makes the data easy to see. Since the white background represents a space without data, it is an acceptable color.

Legibility- The text in the document is all in the same font, Times New Roman. The familiar font and size of the text allow users to easily understand what the text is saying. The symbols and features on the map are also represented with realistic colors so that the map portion is easily readable.

Figure-Ground Organization- The white background and the colored county and features help the user distinguish between relevant information and areas with no data that are not important to the map.

Hierarchical Organization- For this element the main map data is displayed in the very center of the document along with a thick border (neatline). The rest of the information on the document has a thin border and is relatively smaller than the main map portion. This shows the user the importance of the main map data frame.

Balance- The size, color, and layering of the symbology on the map data balance all of the information so as not to overwhelm the user with specific data. The balance shows that all of the data on the map frame is important, and the map frame also balances well within the entire document.
